#PENTAHO DATA INTEGRATION MIGRATE JOB FROM FREE#

Pentaho offers graphical support that makes data pipeline creation easier. It ships with enough pre-built components to extract and blend data from various sources, including enterprise applications, big data stores, and relational sources. Automated arrangements that support transformations and the ability to visualize data on the fly are further standout features. It also assists in managing workflow and improving job execution. Pentaho offers highly developed Big Data Integration with visual tools, eliminating the need to write scripts yourself. Accelerated access to big data stores and robust support for Spark, NoSQL data stores, analytic databases, and Hadoop distributions ensure that the use of Pentaho is not limited in scope.

To sum up, Pentaho is a state-of-the-art technology that makes data migration easy irrespective of the amount of data or the source and destination software.

Are you planning to shift to the latest technology but facing the issue of data migration? Let us help you with a safe and secure migration of your data. Rolustech is a SugarCRM Certified Developer & Partner Firm. We have helped more than 700 firms with various SugarCRM integrations and customizations. Get in touch today for your FREE Business Analysis.

#PENTAHO DATA INTEGRATION MIGRATE JOB FROM CODE#

Pentaho guarantees the safety of data while ensuring that users need to make only a minimal effort. That is one of the reasons to pick Pentaho, but there are more. Developers can write custom Scala or Python code and import custom libraries and Jar files into Glue ETL jobs to access data sources not natively supported by.

The Pentaho online training class from Intellipaat helps you learn the Pentaho BI suite, which covers Pentaho Data Integration, Pentaho Report Designer, Pentaho Mondrian Cubes, Dashboards, and more. This Pentaho training will help you prepare for the Pentaho Data Integration exam by having you work on real-life projects.

#PENTAHO DATA INTEGRATION MIGRATE JOB FROM SOFTWARE#

Shifting to the latest, state-of-the-art technologies requires a smooth and secure migration of data. Pentaho can help you achieve this with minimal effort. Pentaho Kettle makes Extraction, Transformation, and Loading (ETL) of data easy and safe. It allows you to access, manage, and blend any type of data from any source. Whether you are looking to combine various solutions into one or to shift to the latest IT solution, Kettle handles the three stages of a migration: extracting data from the old system, transforming it to map onto the new system, and loading it into the destination software.
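The three ETL stages described above can be illustrated with a small, tool-independent sketch. This is plain Python, not Kettle itself; the legacy column names, the `contacts` table, and the sample rows are all invented for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical legacy export; column names and rows are invented for illustration.
LEGACY_CSV = """cust_name,cust_email,signup
Ada Lovelace,ADA@EXAMPLE.COM,2021-03-04
Alan Turing,alan@example.com,2020-11-30
"""


def extract(text):
    """Extract: read rows from the legacy CSV export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: map legacy columns onto the new schema and normalize values."""
    return [
        (row["cust_name"].strip(), row["cust_email"].strip().lower(), row["signup"])
        for row in rows
    ]


def load(conn, records):
    """Load: insert the mapped records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts "
        "(full_name TEXT, email TEXT, created_on TEXT)"
    )
    conn.executemany("INSERT INTO contacts VALUES (?, ?, ?)", records)
    conn.commit()


# An in-memory SQLite database stands in for the destination system.
conn = sqlite3.connect(":memory:")
load(conn, transform(extract(LEGACY_CSV)))
migrated = conn.execute(
    "SELECT full_name, email FROM contacts ORDER BY full_name"
).fetchall()
print(migrated)
```

In Kettle, each of these functions would roughly correspond to a step in a transformation designed visually, rather than to hand-written code.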

#PENTAHO DATA INTEGRATION MIGRATE JOB FROM HOW TO#

This video demos how to run job entries in parallel with PDI to help you extract meaningful insights from your data faster.

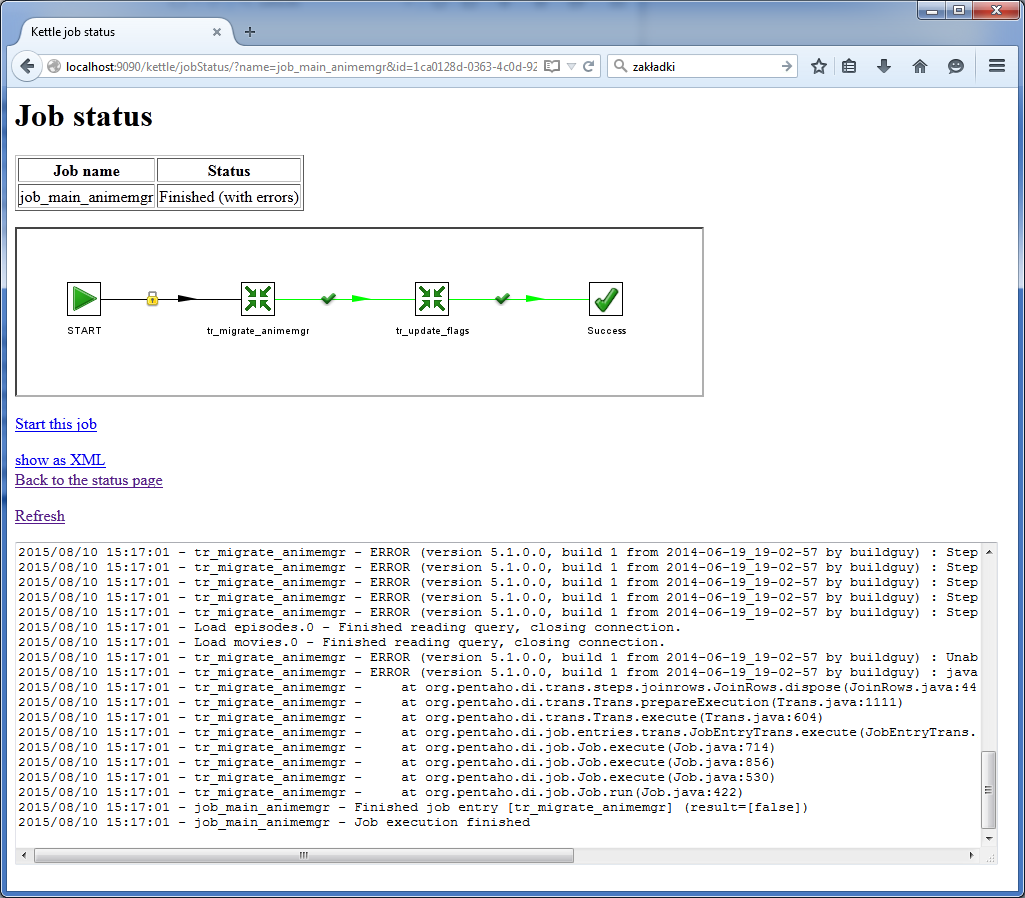

Pentaho Data Integration (PDI): Run Job Entries in Parallel.

A growing focus on customer relationship management means that you can neither lose your data nor continue with old legacy systems.

- Carte: a web server that allows remote monitoring of running Pentaho Data Integration ETL processes through a web browser.
- Kitchen: an application that executes jobs in batch mode, usually on a schedule, which makes it easy to start and control ETL processing.
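As a rough sketch of how Kitchen's batch mode might be driven from a scheduler script, the snippet below assembles a Kitchen command line using the `-file`, `-level`, and `-param:` options. The install path, job file, and the `TARGET_ENV` parameter are hypothetical placeholders, and the helper functions are illustrative rather than part of Pentaho.

```python
import subprocess


def build_kitchen_command(kitchen_path, job_file, params=None, log_level="Basic"):
    """Assemble the argument list for running a .kjb job file with Kitchen."""
    cmd = [kitchen_path, f"-file={job_file}", f"-level={log_level}"]
    # Named parameters are passed as -param:NAME=value; sorted for a stable order.
    for name, value in sorted((params or {}).items()):
        cmd.append(f"-param:{name}={value}")
    return cmd


def run_job(cmd):
    """Run Kitchen and return its exit code (0 means the job finished without errors)."""
    return subprocess.run(cmd, check=False).returncode


command = build_kitchen_command(
    "/opt/pentaho/data-integration/kitchen.sh",  # assumed install path
    "/etl/jobs/migrate_contacts.kjb",            # hypothetical job file
    params={"TARGET_ENV": "staging"},            # hypothetical job parameter
)
print(" ".join(command))
```

Calling `run_job(command)` from a cron entry or similar scheduler would then start the job; it is not invoked here because it requires a local PDI installation.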

#PENTAHO DATA INTEGRATION MIGRATE JOB FROM UPDATE#

Pentaho Data Integration - Kettle ETL tool
